Best of 2021 – 4 Expert-Level Things I Wish I’d Known About Kubernetes

As we close out 2021, we at Container Journal wanted to highlight the most popular articles of the year. Following is the third in our series of the Best of 2021.

InfluxDB Cloud customers run a variety of applications on top of our development platform, from IoT applications to financial applications to infrastructure and server monitoring. These types of applications generate significant quantities of data. However, data has “gravity”—meaning that moving terabytes around the internet or even between regions within the same cloud provider can be cost- and time-prohibitive. Both can become painful factors when moving data for these applications. For this reason, it is important for our customers to have InfluxDB Cloud available in the region and cloud service provider of their choice.

We chose to implement InfluxDB Cloud as a Kubernetes application in order to allow us to meet the multi-cloud and multi-region needs of our customers. Kubernetes provides us with a cloud abstraction layer that allows us to (more or less) write a single application to run everywhere.

While it is powerful, Kubernetes is a relatively new technology. We’ve found incredible success, but it has taken effort. Here are four things I wish I’d known about Kubernetes when we started.

1. Cloud-native applications and binaries are universes apart.

The technical differences between writing a single binary server or stack of interrelated single binary servers and a Kubernetes application are obvious on the surface. Transitioning the mindset of an engineering team between these universes is work that should be accounted for in such efforts.

For example, it is typical to pack as many processes as possible into your single binary server. This is the opposite approach to take in the Kubernetes universe, where you containerize each process into its own container and run armies of pods that can scale dynamically to serve the workloads.

Similarly, in an open source software (OSS) environment (InfluxDB also has an open source version), processes can share memory or disk space. However, once you move to a cloud-native application, your processes may not even live in the same Kubernetes node. It’s improbable that your Kubernetes processes will be able to exchange memory or drop files for each other to read.

When maintaining a single binary server, developers write code to keep the server running at all costs; for example, code to recover from unexpected errors, resetting state and keep serving. In Kubernetes, we do the opposite. If we encounter unexpected errors, our code reports that the pod is unhealthy and lets Kubernetes restart the pod in a clean state, hopefully after a clean shutdown, meaning that it finishes any work that it can before being cleaned up.

Overall, we found that the difference between OSS code and cloud code was so significant that it no longer made sense to maintain a common code base. We use some CI tools to ensure that the APIs between our OSS and Cloud versions do not diverge, but since we stopped trying to maintain a common code base, the velocity of both projects noticeably improved.

2. There are more metrics than you’ll ever be able to handle.

With Kubernetes, the problem with observability is not the lack of metrics—the dilemma is finding a way to locate a signal in all the noise.

It took us many iterations of data collection, data processing and dashboarding to land on a set of RED, USE, SLO and other dashboards and metrics that helped us understand the state of the production services we were offering. I would strongly recommend budgeting plenty of time for iterating on metrics collection, alerts, dashboards and SLIs/SLOs. This is not a side project but a core element to managing multiple Kubernetes clusters.

3. Set up synthetic monitoring.

Despite, or maybe because of, the reams of operational data flowing out of our production Kubernetes clusters, inferring the quality of the user experience that your application is providing can be difficult, especially at the initial stages of being live in production. Our solution for this was augmenting our monitoring efforts by implementing “synthetic monitoring.” As a development platform, the quality of the user experience is all about the performance and reliability of the API. Synthetic monitoring entails running an application externally that exercises the API in a way similar to how users interact with the application.

Originally, our synthetic monitoring did a better job of providing us critical feedback than our metrics-based monitoring, which meant that we had work to do to improve our monitoring and alerts. After a while, our metrics-based monitoring surpassed our synthetic monitoring in terms of alerting us to potential problems in production and, of course, allowing us to diagnose and fix issues, which synthetic monitoring cannot do.

Our synthetic monitoring lives on as a complement to our metrics-based monitoring by providing an objective view regarding the quality of service we are providing to customers. This is especially useful during incidents.

4. Multi-cloud continuous delivery is hard.

As developers, our users are very demanding with regard to availability. One of the core problems that we had initially was that during deployments, Kubernetes would unexpectedly shut down certain services and return a 500 or 503 error. These errors would end up as failed API calls for our users. Having a database at the core of our offering means that we cannot simply retry on server errors, as we don’t have a way to know if there are potential side effects regarding the query that the customer is running.

When you are continuously deploying, this means customers are continuously experiencing this disruption. It was a significant effort to write code throughout the platform to create super smooth deployments that minimize disruption during deployments.

Additionally, the cloud service providers do not offer uniform environments, capabilities and services. Therefore, you have to find a way to manage these differences in your deployments in a maintainable manner.

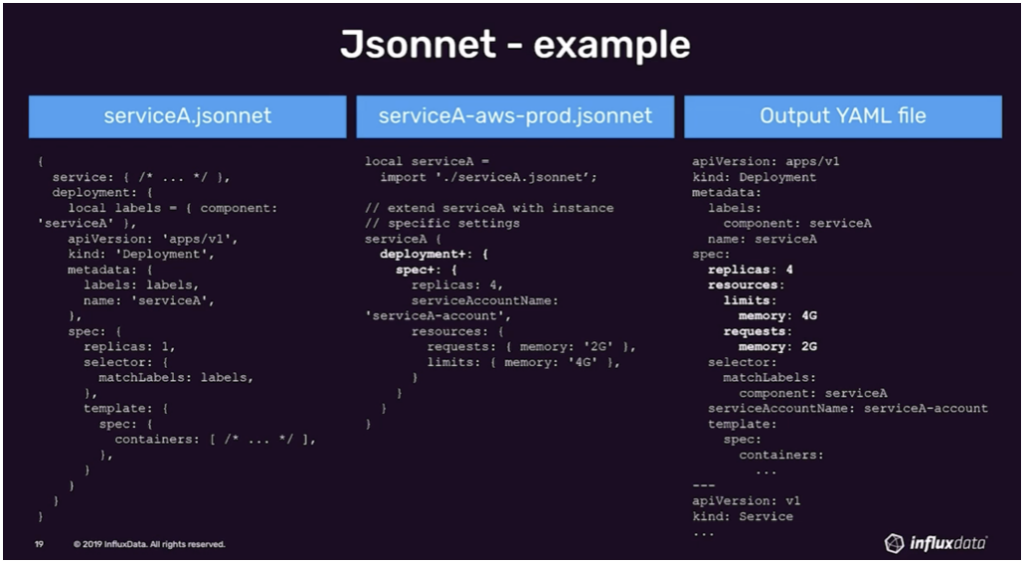

We chose a combination of Jsonnet and Argo to solve this problem. Jsonnet imposes a level of extra complexity, but it turned out to be a critical tool for managing multi-cloud deployments.

Bringing it all together

Kubernetes has helped us scale our platform in a way no other technology available today can. Our customers can use the platform in a totally elastic, pay-as-you-go manner. They can run the database and compute resources as close as possible to where they are generating their data and it helps them manage the gravity of their data.

Kubernetes allows us to turn services up to five times faster than we could with our OSS solution. We’ve proven that you can use Kubernetes as a cloud abstraction layer at scale—but it did take effort, and there were some pain points along the way. Hopefully, you’ll be able to use our learnings as you embark on your own Kubernetes journey.

To hear more about cloud-native topics, join the Cloud Native Computing Foundation and the cloud-native community at KubeCon+CloudNativeCon North America 2021 – October 11-15, 2021