CAST AI announced today at the KubeCon + CloudNativeCon 2024 conference that it has added a Container Live Migration capability that enables the movement and resizing of stateful workloads across Kubernetes clusters.

Additionally, the company is making generally available AI Enabler, a tool that enables IT teams to direct queries to the most cost-effective large language model (LLM).

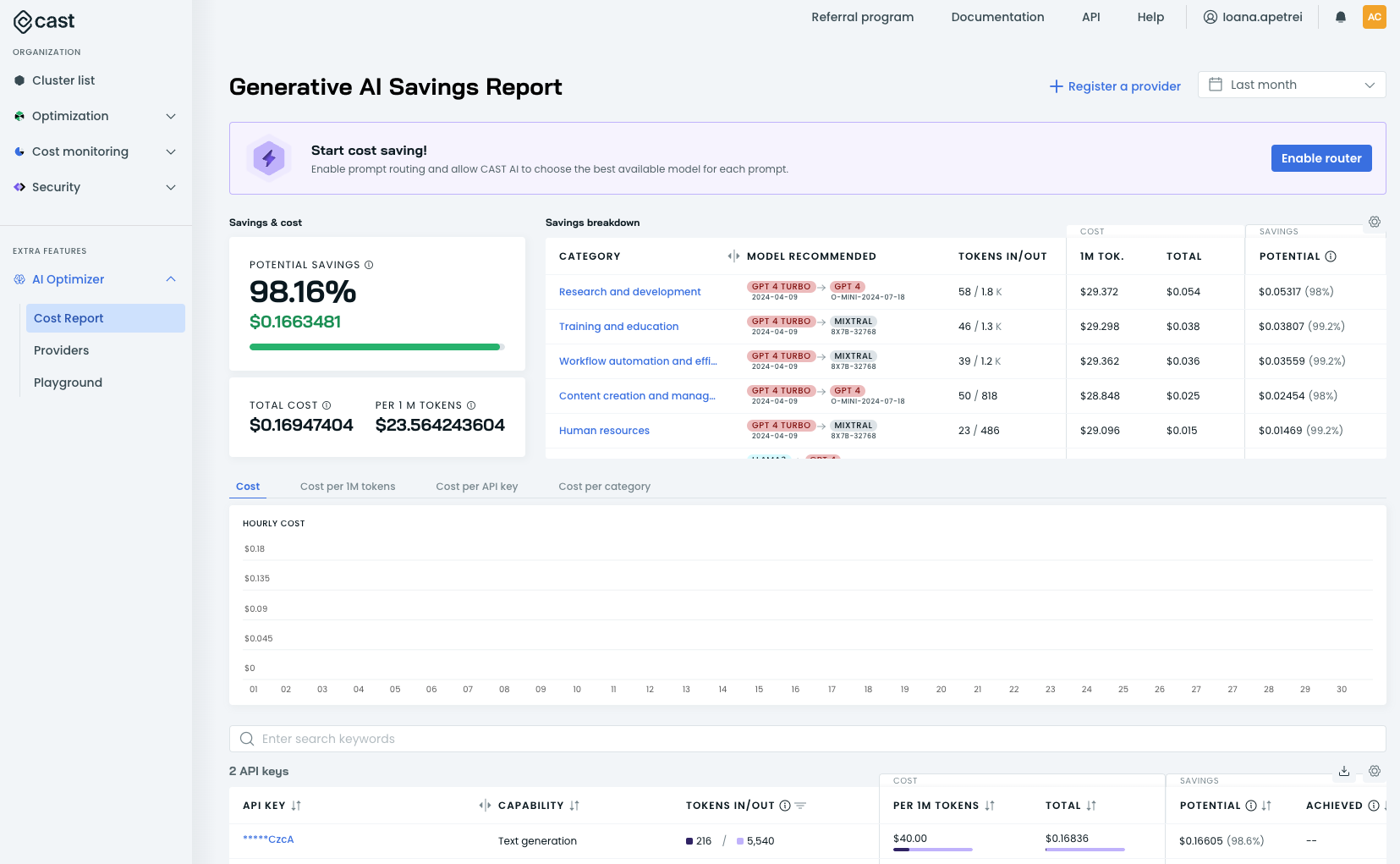

Initially launched earlier this year as AI Optimizer, AI Enabler uses an LLM router provided by CAST AI to analyze users and their associated application programming interface (API) keys, overall usage patterns, and the balance of input versus output tokens to determine which instance of a graphics processing unit (GPU) in the cloud is running an LLM most efficiently.
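The cost side of that routing decision can be illustrated with a minimal sketch. It is not CAST AI's implementation: the model names, the per-token prices, and the helper functions `estimated_cost` and `cheapest_model` are all hypothetical, and the sketch considers only the balance of input versus output tokens, ignoring latency, quality, and GPU placement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    """Hypothetical price sheet entry for one LLM."""
    name: str
    usd_per_million_input: float
    usd_per_million_output: float


def estimated_cost(price: ModelPrice, input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in USD of a workload with the given token mix."""
    total = (input_tokens * price.usd_per_million_input
             + output_tokens * price.usd_per_million_output)
    return total / 1_000_000


def cheapest_model(prices: list[ModelPrice], input_tokens: int, output_tokens: int) -> ModelPrice:
    """Pick the model with the lowest estimated cost for this token mix."""
    return min(prices, key=lambda p: estimated_cost(p, input_tokens, output_tokens))


# Assumed catalog: names and prices are made up for illustration only.
CATALOG = [
    ModelPrice("model-a", usd_per_million_input=0.50, usd_per_million_output=1.50),
    ModelPrice("model-b", usd_per_million_input=0.25, usd_per_million_output=3.00),
]

# Input-heavy workload (for example, summarizing long documents).
input_heavy = cheapest_model(CATALOG, input_tokens=900_000, output_tokens=100_000)

# Output-heavy workload (for example, long-form generation).
output_heavy = cheapest_model(CATALOG, input_tokens=100_000, output_tokens=900_000)

print(input_heavy.name, output_heavy.name)
```

With these assumed prices, the two workloads are routed to different models, which is why the ratio of input to output tokens matters when comparing LLM costs.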

The CAST AI Playground, a testing resource to compare LLM performance and cost, further makes it possible to benchmark and customize configurations.

Laurent Gil, chief product officer for CAST AI, said both of these latest additions extend the capabilities of a platform that makes use of machine learning algorithms and other forms of AI to automatically rightsize Kubernetes environments.

AI Enabler addresses a critical issue that has arisen as more AI models are deployed on Kubernetes clusters, said Gil. Many of the decisions concerning which LLM to deploy have been based more on personal preference and general accessibility than on cost and performance concerns, he added.

Similarly, the Container Live Migration capability that CAST AI has developed reduces costs by giving IT operations teams more control over how applications are deployed. While containers are ripped and replaced all the time, containers running stateful workloads could not previously be combined. The Container Live Migration capability can be used not only to facilitate backup and recovery without incurring any downtime but also to resize containers as the overall application environment grows, said Gil.

Container Live Migration, in effect, brings a capability that is widely employed in virtual machine environments into the realm of containers, he added.

It’s not clear how IT teams are optimizing the management of what are often becoming fleets of Kubernetes clusters, but as organizations become more sensitive to cost, there is a pressing need to optimize those environments. In theory, Kubernetes environments are supposed to scale up and down as application requirements change. In practice, developers are more concerned about ensuring application availability than cost, so there is a natural tendency to overprovision IT infrastructure.

As the number of Kubernetes clusters deployed in production environments continues to increase steadily, more attention is starting to be paid to cost optimization. IT teams are under considerable pressure to rein in costs in an uncertain economic environment, noted Gil. A recent CAST AI analysis found that, on average, IT teams are only using 13% of provisioned CPUs and 20% of memory when deploying clusters that have 50 to 1,000 processors.
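To put those utilization figures in concrete terms, the short sketch below works out how much provisioned capacity sits idle at the reported averages. The cluster size (200 CPUs, 800 GiB of memory) and the helper `idle_capacity` are hypothetical; only the 13% and 20% figures come from the analysis cited above, and an individual cluster may differ widely from the average.

```python
def idle_capacity(provisioned: float, utilization: float) -> float:
    """Return the provisioned amount left unused at a utilization between 0 and 1."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return provisioned * (1.0 - utilization)


# Average utilization figures reported in the CAST AI analysis.
CPU_UTILIZATION = 0.13
MEMORY_UTILIZATION = 0.20

# Hypothetical cluster within the 50-to-1,000-CPU range covered by the analysis.
provisioned_cpus = 200
provisioned_memory_gib = 800

idle_cpus = idle_capacity(provisioned_cpus, CPU_UTILIZATION)
idle_memory_gib = idle_capacity(provisioned_memory_gib, MEMORY_UTILIZATION)

print(f"Idle CPUs: {idle_cpus:.0f} of {provisioned_cpus}")
print(f"Idle memory: {idle_memory_gib:.0f} GiB of {provisioned_memory_gib} GiB")
```

At those averages, roughly 87% of the CPUs and 80% of the memory in such a cluster would be paid for but unused.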

Many of those IT teams are, as a result, embracing platform engineering as a methodology for applying DevOps best practices at scale to rein in those costs. There would also be increased adoption of Kubernetes in general if the platform were easier to manage cost-effectively. The only way to close that gap, of course, is to use platforms such as CAST AI to automate as many routine management tasks as possible, based on the level of performance that needs to be attained and maintained within a defined IT budget.