CAST AI Adds Tools to Optimize Kubernetes Clusters

At the KubeCon + CloudNativeCon conference, CAST AI today added an Automated Workload Rightsizing tool that ensures Kubernetes workloads are efficiently sized and managed in a way that reduces costs. It also added a PrecisionPack scheduler that uses a bin-packing algorithm to ensure pods run on specific nodes.

Laurent Gil, chief product officer of CAST AI, said both offerings can be used on their own or alongside the Autoscaler, Spot Instance Management and Cost Reporting suite of tools the company provides, which employ machine learning algorithms and rules to optimize the management of IT infrastructure.

Fresh from raising an additional $35 million in funding, CAST AI is now extending its reach to optimize Kubernetes environments that IT teams are finding challenging to manage cost-effectively at scale, said Gil.

Although Kubernetes clusters are designed to programmatically scale IT infrastructure resources up and down as needed, developers routinely request the maximum amount of infrastructure resources available to ensure application availability. In other cases, they specify the default setting simply because the cost of IT infrastructure is largely opaque to them.

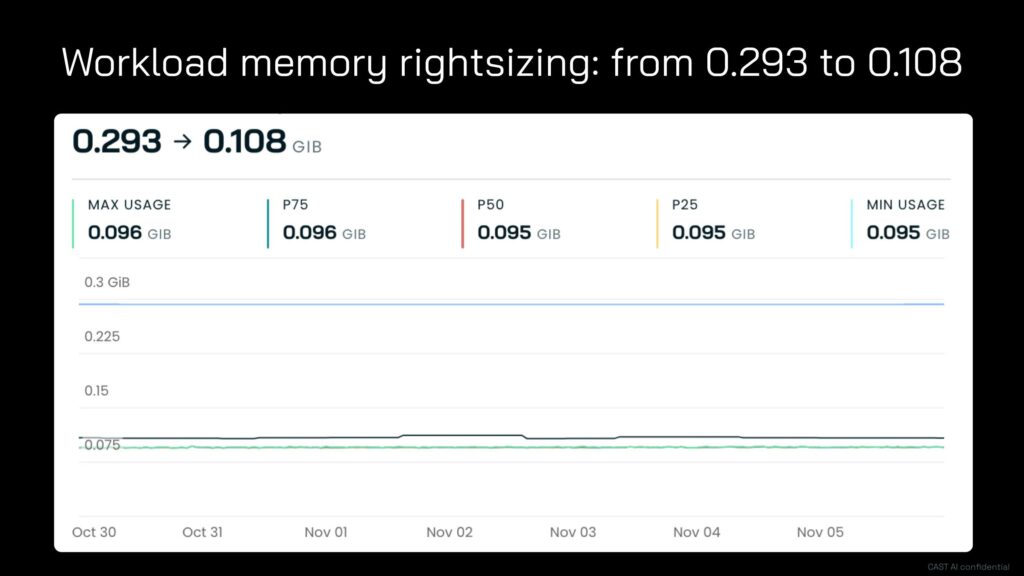

The Automated Workload Rightsizing tool provided by CAST AI monitors actual resource consumption for several days and then uses those metrics to automatically rightsize a cloud-native application, said Gil. IT teams can then reassign the freed-up resources to other applications, he added.
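CAST AI has not described the exact rightsizing calculation. As a minimal sketch, assuming a simple percentile-plus-headroom policy (the 95th percentile, 15% headroom and 10-millicore floor are illustrative choices, not CAST AI's parameters), the hypothetical functions `percentile` and `recommend_request` below show how several days of CPU usage samples could be turned into a smaller request, and how much capacity that would free for other workloads:

```python
def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list; pct is an int in 1..100."""
    ordered = sorted(samples)
    rank = -(-pct * len(ordered) // 100)  # integer ceiling of pct% of n
    return ordered[max(rank, 1) - 1]


def recommend_request(samples_millicores, pct=95, headroom_pct=15, floor=10):
    """Suggest a CPU request in millicores from observed usage samples.

    Hypothetical policy: take a high percentile of observed usage, add a
    headroom margin, and never drop below a small floor.
    """
    if not samples_millicores:
        raise ValueError("need at least one usage sample")
    high = percentile(samples_millicores, pct)
    target = -(-high * (100 + headroom_pct) // 100)  # integer ceiling division
    return max(floor, target)


# Usage: a container that requests 2000m of CPU but mostly uses 200-400m.
observed = [220, 250, 310, 280, 400, 260, 240, 390, 300, 270]
requested = 2000
recommended = recommend_request(observed)
print(f"recommended: {recommended}m, reclaimable: {requested - recommended}m")
# prints "recommended: 460m, reclaimable: 1540m"
```

Integer arithmetic is used for the ceiling steps so the recommendation does not depend on floating-point rounding; a production tool would also track memory and react to usage spikes.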

The PrecisionPack scheduler, meanwhile, makes it possible to ensure higher levels of availability by allowing pods to be assigned to specific nodes.
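CAST AI describes PrecisionPack as using a bin-packing algorithm but has not published its details. As a hypothetical illustration of the general technique only, the sketch below uses first-fit decreasing, a classic bin-packing heuristic, to place pods onto identical nodes by CPU request. `Node` and `first_fit_decreasing` are illustrative names, and a real scheduler would also weigh memory, affinity and availability constraints:

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str
    capacity_millicores: int
    pods: List[str] = field(default_factory=list)
    used_millicores: int = 0

    def fits(self, request: int) -> bool:
        return self.used_millicores + request <= self.capacity_millicores


def first_fit_decreasing(pod_requests: Dict[str, int], node_capacity: int) -> List[Node]:
    """Pack pods onto identical nodes using the first-fit decreasing heuristic."""
    nodes: List[Node] = []
    # Place the largest requests first; that ordering is what makes this FFD.
    for pod, request in sorted(pod_requests.items(), key=lambda kv: kv[1], reverse=True):
        if request > node_capacity:
            raise ValueError(f"{pod} requests more than a single node holds")
        # Use the first existing node with room, otherwise open a new node.
        target = next((n for n in nodes if n.fits(request)), None)
        if target is None:
            target = Node(f"node-{len(nodes) + 1}", node_capacity)
            nodes.append(target)
        target.pods.append(pod)
        target.used_millicores += request
    return nodes


# Usage: six pods (CPU requests in millicores) packed onto 4000m nodes.
pod_requests = {"api": 1500, "worker": 2500, "cache": 1000,
                "cron": 500, "web": 2000, "metrics": 500}
nodes = first_fit_decreasing(pod_requests, node_capacity=4000)
for node in nodes:
    print(node.name, node.used_millicores, node.pods)
# node-1 4000 ['worker', 'api']
# node-2 4000 ['web', 'cache', 'cron', 'metrics']
```

Sorting the largest requests first tends to leave fewer fragmented gaps than placing pods in arrival order, which is why first-fit decreasing is a common baseline for consolidating workloads onto fewer nodes.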

As the number of Kubernetes clusters deployed in production environments steadily increases, more attention is being paid to cost optimization. IT teams are under considerable pressure to rein in costs in an uncertain economic environment, noted Gil.

Many organizations are also embracing platform engineering as a methodology for managing DevOps workflows at scale as part of an effort to lower the total cost of application development and deployment.

The challenge, of course, is that Kubernetes is the most powerful and complex IT platform to find its way into enterprise IT environments in recent memory. The expertise required to optimize Kubernetes environments is still hard to find and retain. The only way to narrow that gap is to provide IT teams with tools that automate processes that would otherwise require them to constantly monitor individual Kubernetes pods and nodes.

Arguably, there would be greater adoption of Kubernetes if the platform were simpler to manage. Kubernetes itself was designed by software engineers for other software engineers. Despite the rise of DevOps practices for managing IT environments programmatically, most IT teams are still made up of administrators with limited programming expertise.

One way or another, however, it will become easier to manage Kubernetes clusters at higher levels of abstraction that IT administrators can master. The issue now is how long that might take as the number of cloud-native applications that require Kubernetes clusters continues to increase.