CloudBolt Software and StormForge have allied to make it simpler for IT teams to reduce Kubernetes costs by applying FinOps best practices.

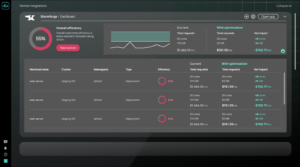

StormForge has developed a platform for automating the management of Kubernetes resources, and that platform is now being integrated with the IT operations platform developed by CloudBolt. Under the terms of the alliance, the output of the machine learning algorithms that StormForge uses to optimize Kubernetes clusters will be fed into CloudBolt’s Augmented FinOps tools to surface cost allocations in real time.

Kyle Campos, chief technology and product officer for CloudBolt, said the alliance will make it simpler for a centralized IT team to track Kubernetes costs alongside those of all the other IT platforms it manages. Rather than merely identifying an anomaly, IT teams need tools that allow them to proactively address the issue using an automation framework, much as they would resolve any other IT incident, he added.

In theory, Kubernetes makes it feasible to scale consumption of IT infrastructure resources up and down as needed. In practice, too many developers still over-provision IT resources even when they deploy applications on a Kubernetes cluster. Often, it’s simply because they are concerned their application may one day not have access to, say, enough memory. That creates an issue for IT teams: Much of the IT infrastructure provisioned is wasted.
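To make that waste concrete, here is a minimal Python sketch, assuming hypothetical workloads, made-up memory figures and a flat, hypothetical price per GiB-month. Because the Kubernetes scheduler reserves capacity based on each container's memory request, the gap between what was requested and what is actually used is capacity that other workloads cannot claim. A real cost model would also need to account for CPU, node pricing and shared cluster overhead, so treat this only as an illustration of the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str             # hypothetical workload name
    requested_gib: float  # memory request set by the developer
    used_gib: float       # observed peak usage (hypothetical figure)


def idle_memory_gib(containers):
    """Sum requested-but-unused memory across containers, never counting negatives."""
    return sum(max(c.requested_gib - c.used_gib, 0.0) for c in containers)


def idle_cost_per_month(containers, price_per_gib_month):
    """Price the idle memory at an assumed flat rate per GiB-month."""
    return idle_memory_gib(containers) * price_per_gib_month


if __name__ == "__main__":
    workloads = [
        Container("checkout", requested_gib=8.0, used_gib=2.5),
        Container("search", requested_gib=4.0, used_gib=3.5),
        Container("reports", requested_gib=16.0, used_gib=4.0),
    ]
    print(idle_memory_gib(workloads))                               # 18.0
    print(idle_cost_per_month(workloads, price_per_gib_month=3.0))  # 54.0
```

In this made-up example, 18 of the 28 GiB requested sit idle, which is the kind of over-provisioning that tools such as StormForge's aim to surface and correct.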

IT teams, of course, should monitor the consumption of IT infrastructure, but most of the staff are too busy to monitor individual clusters. In fact, a recent survey conducted by the Cloud Native Computing Foundation (CNCF) found that 69% of respondents work for organizations that either do not monitor Kubernetes spending at all or rely on monthly estimates.

FinOps has emerged as a methodology for optimizing cloud computing costs by proactively monitoring the consumption of IT infrastructure. Rather than having disparate procurement teams working in silos to identify and approve costs, FinOps calls for bringing together business, financial and IT professionals to reduce costs by establishing cloud computing best practices.

The challenge these teams face, however, is that cloud service providers all use different nomenclature to describe similar services. The FinOps Foundation is working to define the FinOps Open Cost and Usage Specification (FOCUS), which promises to normalize cloud cost and usage data, but it may be a while before that specification is fully defined.

It’s not clear how quickly organizations are embracing FinOps as a best practice for containing cloud computing costs. In the face of ongoing economic headwinds, pressure on IT teams to reduce the total cost of IT is rising. In many cases, developers have been allowed to provision cloud resources on demand with minimal supervision, resulting in spiraling costs as more workloads are deployed in cloud computing environments. Without any form of monitoring, organizations remain unaware of actual spend and over-allocated cloud resources. Calculating Kubernetes costs using manual methods is time-consuming and often inaccurate.

Hopefully, a day will soon come when cost metrics surface alongside application performance metrics in every platform that DevOps teams already use. The overall goal should be to shift as much cost control as far left toward application developers as possible, noted Campos.

In the meantime, as IT organizations increasingly employ multiple cloud platforms to run different classes of workloads, understanding the true cost of choosing one cloud over another is only likely to become more challenging.

Photo credit: DWNTWN Co. on Unsplash