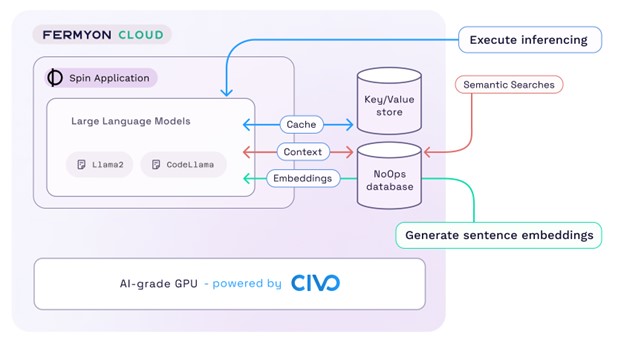

Fermyon Technologies has extended the reach of its platform-as-a-service (PaaS) environment for building and deploying WebAssembly (Wasm) applications to include a serverless computing framework for invoking artificial intelligence (AI) models.

Company CEO Matt Butcher said Fermyon Serverless AI is unique because it consumes expensive graphics processing unit (GPU) resources only for the duration of an inferencing request. That approach also substantially reduces the amount of energy required to provide that capability, he added.

As part of that effort, Fermyon has also partnered with Civo, a cloud computing services provider, to lower the cost of accessing the GPUs needed to build and run those AI models, with cold start times of 50 milliseconds.

Civo CEO Mark Boost said that, given the cost of building AI models, many organizations are now looking beyond the hyperscalers to alternatives such as the Kubeflow framework running on Kubernetes clusters to build and deploy AI models at a substantially lower cost. At the same time, Fermyon is encouraging the development of those applications by including Fermyon Serverless AI in the free tier of its service.

Wasm was originally developed five years ago under the auspices of the World Wide Web Consortium (W3C) as a common binary format that browsers could execute alongside JavaScript code, and it is now starting to be used to rapidly build lighter-weight applications that can be deployed on any server platform.

Wasm also provides an alternative approach to application security. Existing approaches to building applications rely on aggregating software components that tend to lack distinct boundaries between them. As a result, it becomes relatively simple for malware to infect all the components of an application. In contrast, Wasm code runs in a sandbox that isolates each execution environment, preventing malware from moving laterally across an application.

In effect, Wasm offers the promise of being able to write an application once and securely deploy it anywhere. Fermyon Technologies extends that capability with a PaaS that reduces the infrastructure required to build and deploy these applications and that now includes a means to access AI models by invoking APIs via a serverless computing framework.

It’s not yet clear how closely Wasm and AI application development will become linked, but as organizations revisit their overall application development strategies, many of them will be more willing to consider emerging technologies that are gaining enough momentum today to remain relevant years from now as tools and platforms evolve.

In the meantime, organizations of all sizes will soon be looking for ways to meld the DevOps workflows used to build applications today with the machine learning operations (MLOps) workflows that data scientists employ to construct AI models. The challenge, of course, is that the cultural divide between DevOps teams building and deploying modern cloud-native applications and data science teams building models is substantial. Cloud computing frameworks give organizations a way to help these teams collaborate while preserving the benefits of a separation of engineering concerns that lets each team stay out of the other’s way.