Rafay Systems is making it simpler to build and deploy artificial intelligence (AI) applications on Kubernetes clusters by adding support for graphics processing units (GPUs) to its management platform.

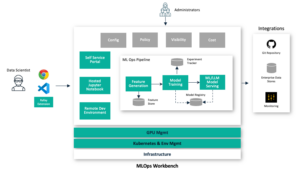

Additionally, the company is adding an AI Suite to its portfolio that provides data scientists and developers with pre-configured workspaces and pipelines based on a set of machine learning operations (MLOps) best practices.

Rafay Systems CEO Haseeb Budhani said the goal is to make it simpler for IT teams to deploy frameworks such as Kubeflow to build and deploy AI applications in Kubernetes environments, using available GPU resources that can be dynamically allocated and time-sliced as needed.

In addition, Rafay has embedded compliance and governance tools to enable organizations to restrict access and apply prompt and cost controls to AI application development. That latter capability is especially critical given the high cost of acquiring or leasing GPUs in the cloud, noted Budhani.

Rafay is also investing in building its own co-pilot, based on generative AI platforms, to make it simpler to manage Kubernetes environments, Budhani added.

Platform-as-a-Service Environment

Initially focused on optimizing consumption of Kubernetes clusters, the platform provided by Rafay is evolving into a platform-as-a-service (PaaS) environment that can be deployed on-premises or in the cloud. Those capabilities are now being extended with support for Jupyter Notebooks and Visual Studio Code (VS Code). These integrations with integrated development environments (IDEs) are intended to make it simpler for platform engineering teams to configure and manage self-service portals for developers and data scientists.

Kubernetes has evolved into a de facto standard for building and deploying AI applications. However, many organizations are still looking for ways to make the platform more accessible to developers and data scientists. At the same time, the open-source Kubernetes community continues to invest in providing capabilities such as scheduling management for batch-oriented applications.

There are alternatives, such as SLURM, that many organizations with high-performance computing (HPC) platforms have already invested in. Rafay therefore supports both Kubernetes and SLURM, depending on which an organization prefers, said Budhani.

It’s still early days as far as building and deploying AI applications is concerned, but it’s already apparent that IT teams are taking on a larger role. Once seen as the purview of a data science team, managing the infrastructure needed to train and deploy AI models is a task that more organizations are now assigning to IT teams, noted Budhani.

It may be a while before organizations fully operationalize AI, but as advances continue to be made, the rate at which AI applications are built and deployed will accelerate. The challenge facing IT teams is managing that process at scale. Most organizations already struggle to keep pace with existing demands for faster application development. Adding AI models to the software development lifecycle will require software engineering teams to substantially rework workflows to support what is essentially a new type of software artifact that, just like any other, will need to be continuously updated.